Interactive Demos

I love interactive web-based demos for explaining new ideas. I made some for my work applying LLMs to cryptography, BioCLIP has a HuggingFace demo, and my (recent, at the time of writing) work on sparse autoencoders for vision has a bunch of demos.

Beyond demos as a form of scientific communication, I build a lot of interactive webapps for my own research, to convince myself of ideas or to provide intuition to collaborators. I wanted to share my process for developing interactive webapps, with links to specific technologies based on how much effort you want to invest, how much control over the experience you want and how much public compute you have. Hopefully this will help you choose and implement the right type of interactive demo based on your specific research needs and technical constraints.

When to Build a Demo

Not every research project needs a demo. If you are developing a new optimization algorithm whose claim to fame is needing only half the optimization steps compared to Adam, a demo is not needed. Or if you have a novel attention variant that reduces memory costs by 20%, don’t bother with a demo.

Demos, to me, are useful when you introduce a qualitative shift that is not well-captured by existing quantitative measures. What are examples of this? Consider these three scenarios where interactive demos can be particularly impactful:

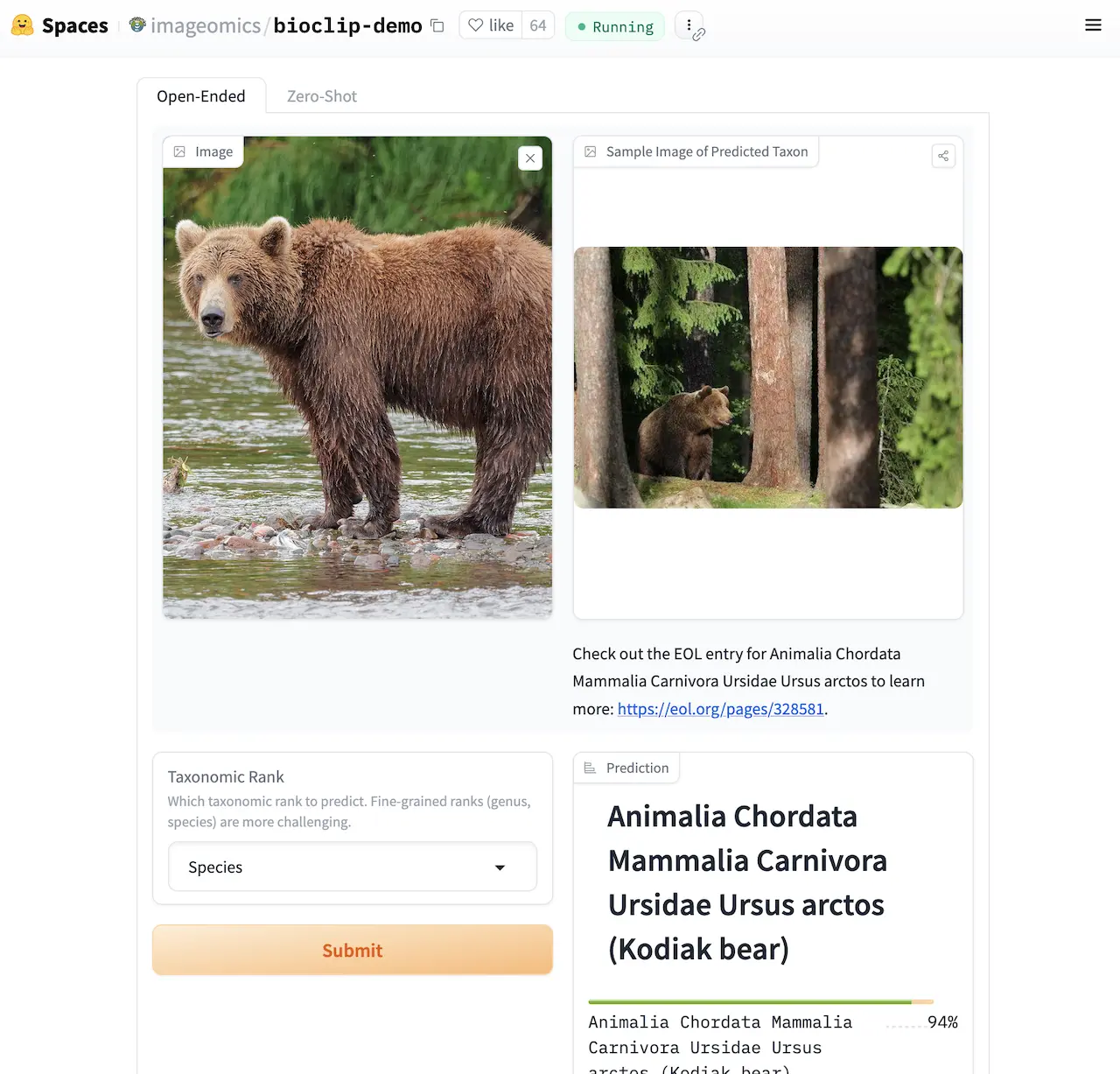

Novel capabilities like zero-shot species classification (like BioCLIP), few-shot prompting (like GPT-3), zero-shot semantic segmentation (like SAM and SAMv2) or manipulating internal representations (like sparse autoencoders) often require a demo to properly express just how amazing the capability is. While static examples in your hook figure can make a big difference, giving potential users the chance to play with your new system on their own without any barrier to entry (downloading code, setting up an environment) can completely change their perspective.

This was especially apparent to me while doing field work in Hawaii. Watching biologists use the BioCLIP and SAMv2 demos to iteratively develop their understanding of model capabilities in real time and then using that understanding to shape their study design was phenomenal. I can only imagine how different their research would have looked without a working BioCLIP demo. This was a highly impactful experience for me that shifted the way I think about online demos.

Qualitative shifts in quantitative metrics like inference speed or data efficiency often benefit from a demo, where possible, to give potential users a concrete feel for how dramatically improved your method is. For example, Mistral’s recent Le Chat is powered by Cerebras chips and gets over 1.1K tokens/sec, which is 10x faster than other assistants. It’s shocking to see how quickly it writes code and responses; it really must be experienced to be understood.

See my notes on performance leading to qualitative shifts in workflows for a little discussion on this.

Hard-to-understand ideas like symmetric encryption or manipulating ViT internal representations can benefit from demos to help potential users build intuition around your new, poorly-understood idea. This is often why I build demos, even for myself. I want to move things around with my hands and see how the model responds. My internal dialogue normally goes “What if I do this?” followed by “What if I do that?” followed by “Ohhhhhh.” Demos can help your users do the same thing (I think).

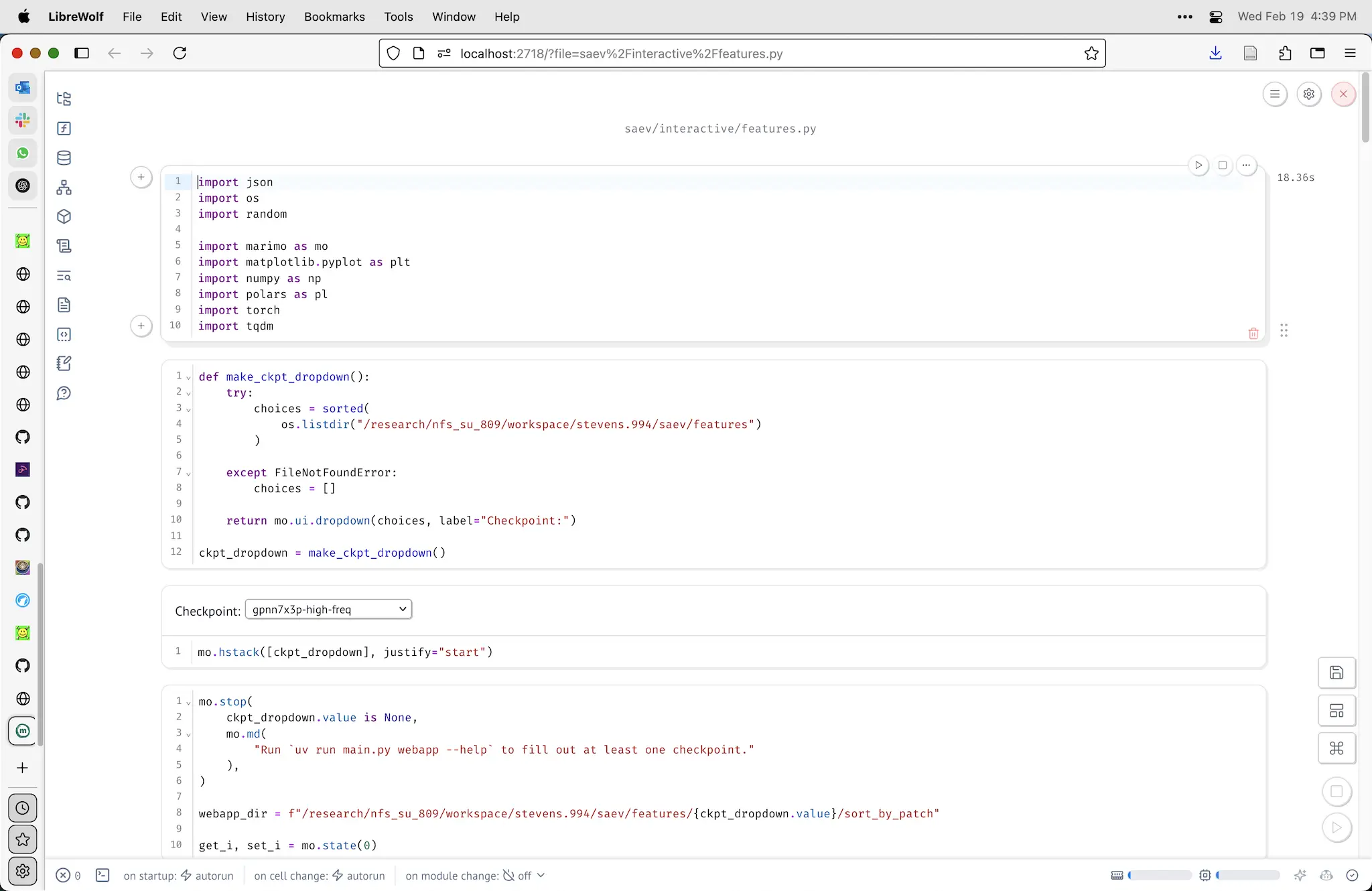

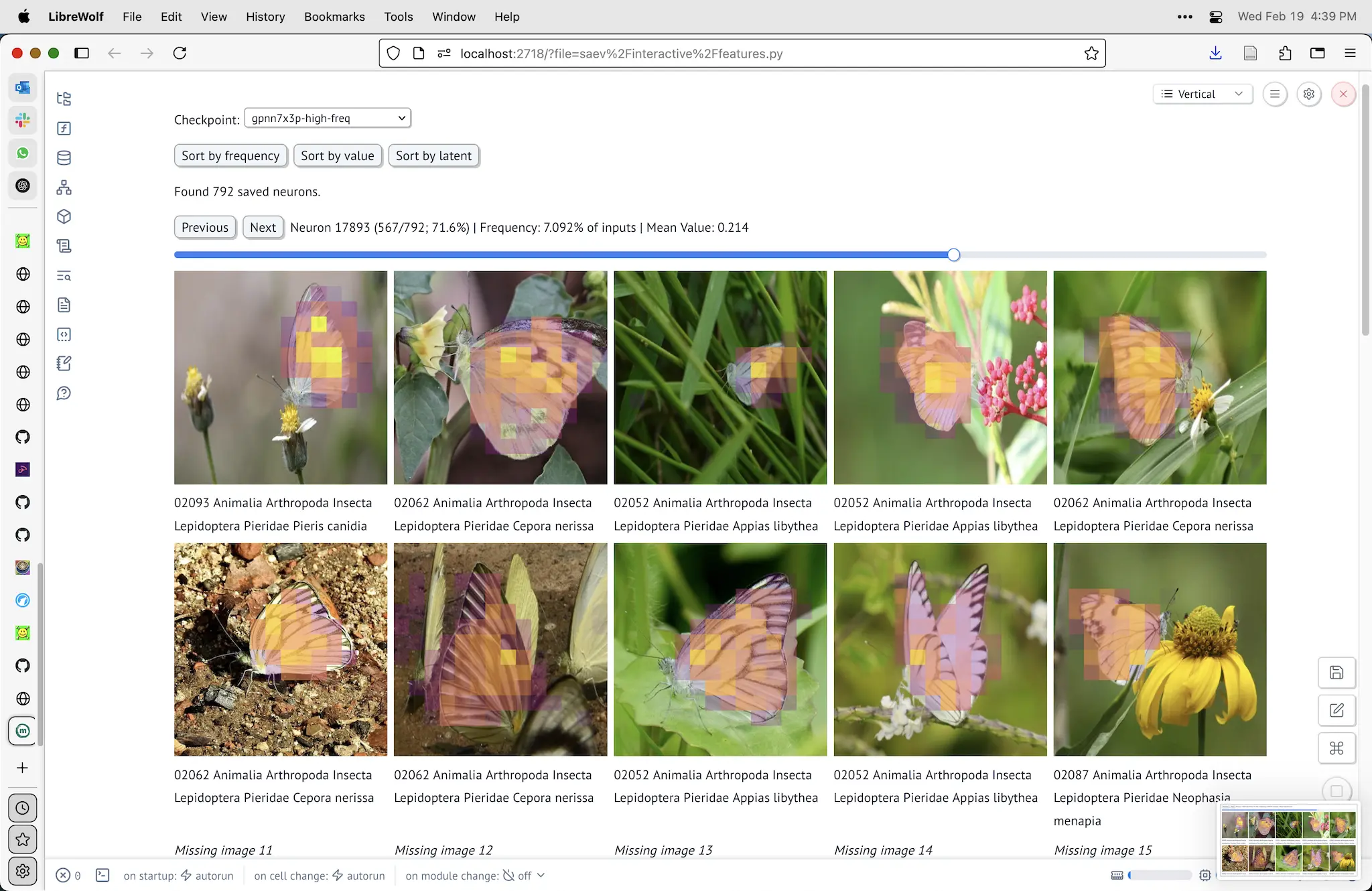

Marimo

Marimo is a modern, reactive take on Jupyter notebooks: whenever you change a cell, all other cells that depend on that cell’s values automatically update. This lets you build webapps quickly, without much effort. I used this to explore individual features in sparse autoencoders. Below you can see a screenshot of the Marimo editor and what the dashboard looks like while I’m using it.
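To make "reactive" concrete, here is a toy sketch in plain Python. It is not how Marimo is implemented and it uses none of Marimo's API; `ToyNotebook`, `cell` and `set` are names I made up for illustration. It only shows the core idea: each cell declares what it reads, and changing a value re-runs everything downstream.

```python
from typing import Callable


class ToyNotebook:
    """Toy stand-in for a reactive notebook (hypothetical, not Marimo's code)."""

    def __init__(self) -> None:
        self.values: dict[str, object] = {}
        # cell name -> (function, names of the values it reads)
        self.cells: dict[str, tuple[Callable[..., object], tuple[str, ...]]] = {}

    def set(self, name: str, value: object) -> None:
        """Change an input value, then re-run every cell that reads it."""
        self.values[name] = value
        self._rerun_dependents(name)

    def cell(self, name: str, deps: list[str], fn: Callable[..., object]) -> None:
        """Register a cell that computes `name` from the values in `deps`."""
        self.cells[name] = (fn, tuple(deps))
        self._run(name)

    def _run(self, name: str) -> None:
        fn, deps = self.cells[name]
        self.values[name] = fn(*(self.values[d] for d in deps))
        self._rerun_dependents(name)

    def _rerun_dependents(self, changed: str) -> None:
        # Naive propagation: a cell may run more than once per change.
        for name, (_, deps) in self.cells.items():
            if changed in deps:
                self._run(name)


nb = ToyNotebook()
nb.set("threshold", 0.5)
nb.set("activations", [0.1, 0.7, 0.9])
nb.cell("active", ["activations", "threshold"],
        lambda acts, t: [a for a in acts if a > t])
nb.cell("count", ["active"], len)
print(nb.values["count"])  # 2

nb.set("threshold", 0.8)   # "active" and "count" update on their own
print(nb.values["count"])  # 1
```

In a real Marimo notebook you do not declare dependencies by hand like this; the point of the sketch is just that a slider or dropdown behaves like `nb.set(...)`, and the plots downstream of it refresh without you re-running anything.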

One of the downsides of Marimo is that it needs to run Python on a computer somewhere. This is not great if you want to share a particular notebook with someone else to use themselves and your notebook uses a lot of hard-to-install dependencies (like PyTorch) or it needs a lot of compute like a GPU or a huge hard drive.

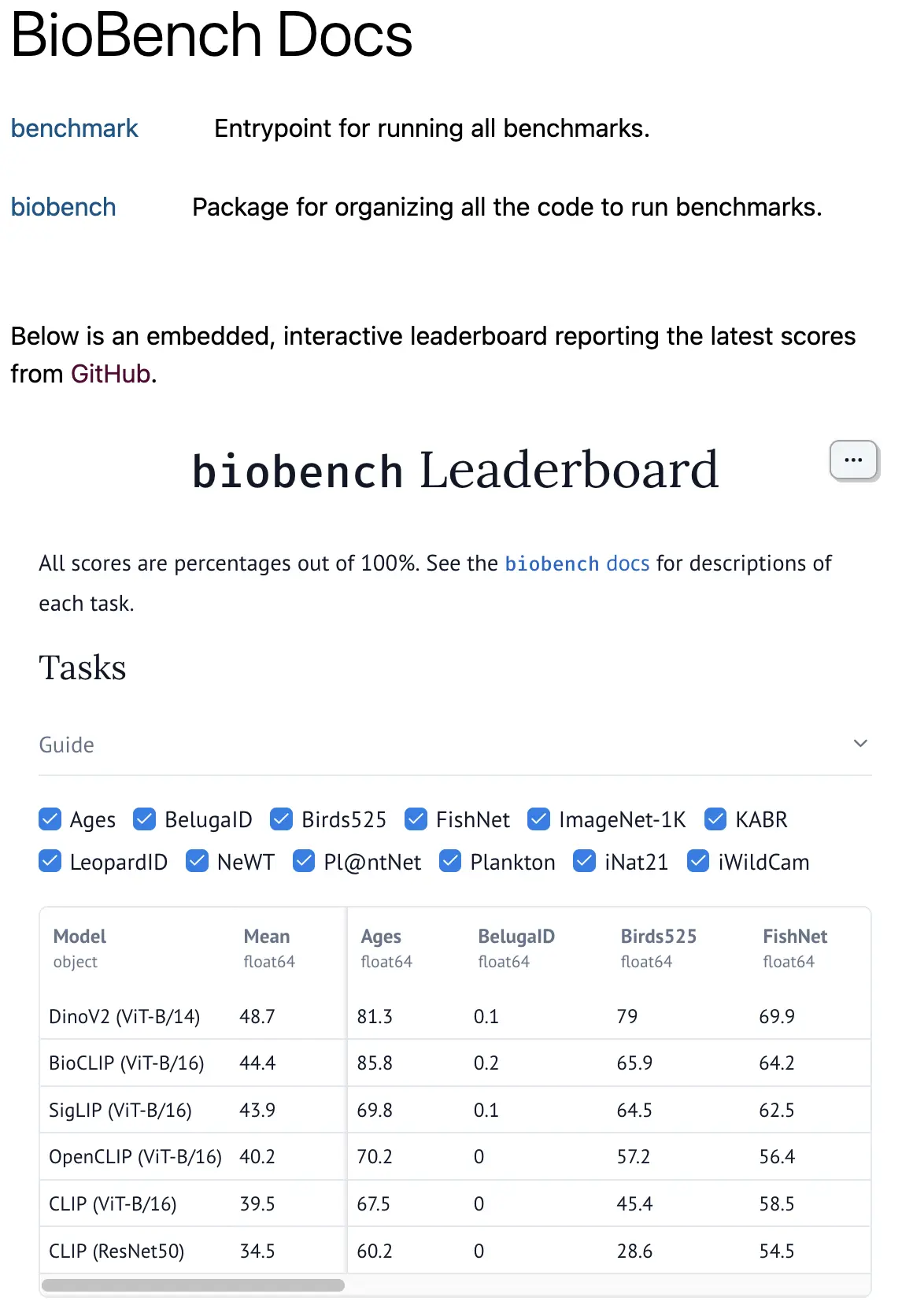

This can be mitigated by permalinking to online notebooks, but this limits you to WebAssembly-compatible packages and no disk space. I use this technique to make an interactive leaderboard for BioBench.

You can see the leaderboard code in the leaderboard itself. It just grabs the latest results.csv and shows it as a Pandas dataframe.

Gradio

If you want to (1) run real Python code and (2) share it with someone and (3) not ask them to install Python, then Gradio via HuggingFace Spaces is probably the easiest way to do it. You write a Gradio app using the Gradio Python library. You can install any Python package and run any Python code that you write. Then, HuggingFace will host this app for free on a little 1 vCPU VM.

The downside of this approach is that your app has to fit within the Gradio library. This can be fine for a lot of ML demos: specify some combination of text, image and audio input, run it through a deep neural network, then get some text, image and audio output back. The BioCLIP demo is exactly that: give it an image and a list of possible classes, get a probability distribution over those classes back. All for free, from any device.
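Here is a minimal sketch of that image-plus-classes pattern. It is not the BioCLIP demo's code: `score_image` is a hypothetical placeholder that returns the same score for every class, where a real demo would run a model. All helper names are mine. The Gradio import sits inside `build_demo` so the helper functions can be run and tested without Gradio installed.

```python
import math


def parse_classes(text: str) -> list[str]:
    """Split a comma- or newline-separated string into clean class names."""
    parts = text.replace("\n", ",").split(",")
    return [p.strip() for p in parts if p.strip()]


def softmax(scores: list[float]) -> list[float]:
    """Numerically stable softmax over a list of scores."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def score_image(image, classes: list[str]) -> list[float]:
    """Hypothetical placeholder: a real demo would return model scores here."""
    return [0.0 for _ in classes]  # equal scores -> uniform probabilities


def classify(image, class_text: str) -> dict[str, float]:
    """Map each candidate class name to a probability."""
    classes = parse_classes(class_text)
    if not classes:
        return {}
    return dict(zip(classes, softmax(score_image(image, classes))))


def build_demo():
    """Wrap classify() in a Gradio UI: image + text in, labeled scores out."""
    import gradio as gr  # lazy import, only needed to build the UI

    return gr.Interface(
        fn=classify,
        inputs=[gr.Image(type="pil"), gr.Textbox(label="Classes (comma-separated)")],
        outputs=gr.Label(),
        title="Zero-shot classification sketch",
    )


print(classify(None, "cat, dog"))  # {'cat': 0.5, 'dog': 0.5}
# To serve the app locally: build_demo().launch()
```

On a Space, I would expect this to live in an `app.py` that ends with `build_demo().launch()`, with `gradio` and your model's dependencies listed in `requirements.txt`.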

JavaScript

If you want to build truly custom web-based interactive ~experiences~ then you probably need to write some JavaScript yourself. If you have not written JavaScript-based frontends before, this is not necessarily a trivial task. JavaScript mostly sucks and there are various efforts to make it better, but it mostly sucks.

I use Elm for many web-based demos ([1], [2], [3]), but have also written my fair share of vanilla JavaScript ([1], [2], [3]).

I do not want to get into “how to do web dev” because there are smarter people than me out there for that. The trick I want to describe in this post is how to use Gradio with HuggingFace Spaces as a free Python backend API server.

Basically, Gradio apps are queryable via HTTP. There is a JavaScript package available with docs but I wrote a custom Elm package that mimics the functionality using raw HTTP requests. It’s not a great strategy, but you can create a Python-based backend using the free hardware and hosting provided by HuggingFace Spaces, then host your HTML+CSS+JS app using GitHub Pages, and you have an entire stack hosted for free! This is great if you’re a grad student.
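Before wiring up a frontend, it can help to check that the Space answers programmatically at all. A rough sketch from Python, using the `gradio_client` package rather than the JavaScript or Elm clients mentioned above: the Space ID, the `/predict` endpoint name and the single text input are all assumptions you would swap for your own app's, and `FakeClient` is a made-up stand-in so the sketch runs offline.

```python
class FakeClient:
    """Offline stand-in with the same predict() shape as gradio_client.Client."""

    def predict(self, *args, api_name: str = "/predict"):
        return {"endpoint": api_name, "inputs": list(args)}


def connect(space_id: str):
    """Open a connection to a Space such as "username/space-name"."""
    from gradio_client import Client  # lazy import, only needed for real calls

    return Client(space_id)


def query_space(client, *inputs, api_name: str = "/predict"):
    """Send inputs to one named endpoint of a Gradio app and return its output."""
    return client.predict(*inputs, api_name=api_name)


# Dry run without network access:
print(query_space(FakeClient(), "cat, dog"))

# Against a real Space (hypothetical ID, requires `pip install gradio_client`):
# print(query_space(connect("your-username/your-space"), "cat, dog"))
```

A static frontend on GitHub Pages does the same thing over HTTP from the browser: send the inputs to a named endpoint, get the outputs back.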

Summary

| | Marimo | Gradio | JavaScript |
|---|---|---|---|
| Setup Difficulty | Low | Medium | High |
| Customizability | Medium | Low-Medium | High |
| Requirements | Python environment, or limited web-only version | Free on HuggingFace Spaces (1 vCPU) | Static hosting (GitHub Pages) + optional backend |
| Language | Python | Python | JavaScript/web development |
| Best For | Quick prototyping, personal research tools, reactive notebooks | ML model demos with standard input/output patterns | Custom interactive experiences with complete design freedom |
| Limitations | Web version limited to WebAssembly-compatible packages | Must fit within Gradio’s component system | Requires web development skills, potentially complex setup |
| Use with ML Models | Direct Python integration | Built specifically for ML demos | Requires API to connect to models |
| Example Use Case | Interactive data exploration, feature visualization | Image classification demo, text generation UI | Complex multi-step visualization, custom interactions |
| Maintenance | Dependent on Marimo’s development | Dependent on Gradio and HuggingFace | More stable long-term with standard web technologies |

Sam Stevens, 2024